Description

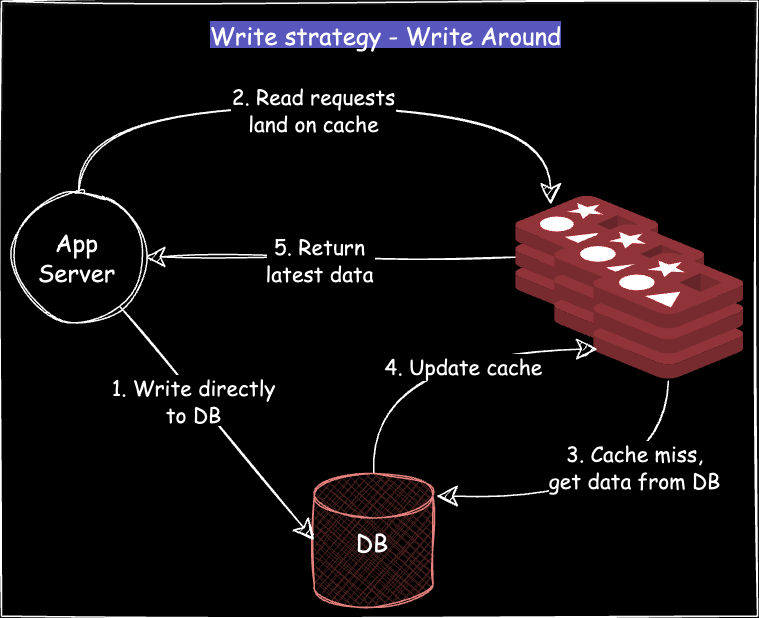

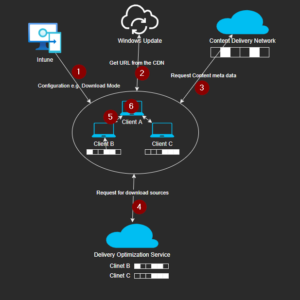

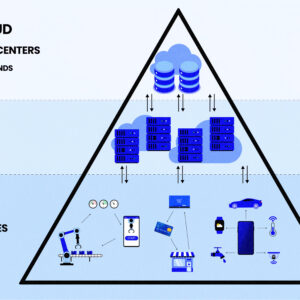

Our Distributed Cache Management service designs and manages in-memory cache systems that run across regions or edge nodes to reduce latency and backend load. Using technologies such as Redis Cluster, Redis Enterprise, Memcached, and Hazelcast, we deploy caching solutions for real-time applications, including ad serving, gaming leaderboards, e-commerce recommendations, and session stores. We support cache sharding, replication, pub/sub messaging, and persistence strategies for fault tolerance. Edge caches are configured to serve frequent reads locally, which cuts round-trips to centralized data centers. We implement eviction policies (LRU, least recently used; LFU, least frequently used), monitoring with Prometheus and Grafana, and failover policies for high availability. Integration with CDNs, microservices, and APIs carries these performance gains across your stack as it scales. Whether you're building a geo-distributed app or an IoT platform, our caching systems keep frequently accessed data close to where it's needed.

Babangida –

The IT Services team significantly improved our application performance through their distributed cache management. We saw a noticeable reduction in latency and improved responsiveness, especially during peak usage. Their expertise in configuring and managing the caching layer at our edge locations has been invaluable in scaling our platform efficiently and minimizing cloud costs. The transition was seamless, and we’re extremely pleased with the positive impact on our user experience.

Falilat –

The team significantly improved our application’s performance with their distributed cache management solution. We saw a noticeable reduction in latency and improved responsiveness, particularly during peak traffic periods. Their expertise in configuring and managing the caching layer was invaluable in optimizing our infrastructure for speed and efficiency. We are very pleased with the results.

Barnabas –

The distributed cache management service has significantly improved our application performance. We’ve seen a noticeable decrease in latency and a substantial reduction in database load, especially during peak traffic periods. The team’s expertise in configuring and maintaining the caching layer across our various edge locations has been invaluable in ensuring a smooth and responsive user experience. This has made a real difference in our ability to serve our customers effectively.

Ishaku –

The team’s expertise in distributed cache management has been instrumental in significantly improving our application’s performance. They efficiently implemented a Redis layer across our edge locations, resulting in faster data retrieval times and a noticeable reduction in cloud calls. This has led to a much more responsive experience for our users, particularly during peak usage periods. Their proactive approach and understanding of our needs made the entire process smooth and successful.

Ogochukwu –

The team’s distributed cache management solution has been instrumental in significantly improving our application’s performance and scalability. Data retrieval is noticeably faster, reducing latency for our users and decreasing the load on our cloud infrastructure. We’ve seen a tangible difference in app responsiveness and a substantial cost saving due to fewer cloud calls. Their expertise and proactive approach to management have made a real impact on our business.